Archive for the ‘Express 188’ Category

Profile: John Cook

John Cook has dedicated his career to dispelling myths and misunderstandings about climate change through his website, Skeptical Science. As the Climate Communication Fellow for the Global Change Institute at the University of Queensland, he is well placed to address doubts about climate change by making the findings of research papers accessible and understandable to the lay person. This role could not be more important today, as opinions are increasingly polarised.

Making Sense of Sensitivity … and Keeping It in Perspective

Posted on 28 March 2013 by dana1981

Yesterday The Economist published an article about climate sensitivity – how much the planet’s surface will warm in response to the increased greenhouse effect from a doubling of atmospheric CO2, including amplifying and dampening feedbacks. For the most part the article was well-researched, with the exception of a few errors, like calling financier Nic Lewis “an independent climate scientist.” The main shortcomings in the article lie in its interpretation of the research that it presented.

For example, the article focused heavily on the slowed global surface warming over the past decade, and a few studies which, based on that slowed surface warming, have concluded that climate sensitivity is relatively low. However, as we have discussed on Skeptical Science, those estimates do not include the accelerated warming of the deeper oceans over the past decade, and they appear to be overly sensitive to short-term natural variability. The Economist article touched only briefly on the accelerated deep ocean warming, and oddly seemed to dismiss this data as “obscure.”

The Economist article also referenced the circular Tung and Zhou (2013) paper we addressed here, and suggested that if equilibrium climate sensitivity is 2°C to a doubling of CO2, we might be better off adapting to rather than trying to mitigate climate change. Unfortunately, as we discussed here, even a 2°C sensitivity would set us on a path for very dangerous climate change unless we take serious steps to reduce our greenhouse gas emissions.

Ultimately it was rather strange to see such a complex technical subject as climate sensitivity tackled in a business-related publication. While The Economist made a good effort at the topic, their lack of expertise showed.

For a more expert take on climate sensitivity, we re-post here an article published by Zeke Hausfather at the Yale Forum on Climate Change & the Media.

Climate sensitivity is suddenly a hot topic.

Some commenters skeptical of the severity of projected climate change have recently seized on two sources to argue that the climate may be less sensitive than many scientists say and the impacts of climate change therefore less serious: A yet-to-be-published study from Norwegian researchers, and remarks by James Annan, a climate scientist with the Japan Agency for Marine-Earth Science and Technology (JAMSTEC).

While the points skeptics are making significantly overstate their case, a look at recent developments in estimates of climate sensitivity may help provide a better estimate of future warming. These estimates are critical, as climate sensitivity will be one of the main factors determining how much warming the world experiences during the 21st century.

Climate sensitivity is an important and often poorly understood concept. Put simply, it is usually defined as the amount of global surface warming that will occur when atmospheric CO2 concentrations double. These estimates have proven remarkably stable over time, generally falling in the range of 1.5 to 4.5 degrees C per doubling of CO2.* Using its established terminology, the IPCC in its Fourth Assessment Report slightly narrowed this range, arguing that climate sensitivity was “likely” between 2 C and 4.5 C, and that it was “very likely” more than 1.5 C.

The wide range of estimates of climate sensitivity is attributable to uncertainties about the magnitude of climate feedbacks (e.g., water vapor, clouds, and albedo). Those estimates also reflect uncertainties involving changes in temperature and forcing in the distant past. But based on the radiative properties, there is broad agreement that, all things being equal, a doubling of CO2 will yield a temperature increase of a bit more than 1 C if feedbacks are ignored. However, it is known from estimates of past climate changes and from atmospheric physics-based models that Earth’s climate is more sensitive than that. A prime example: Small perturbations in orbital forcings resulting in vast ice ages could not have occurred without strong feedbacks.
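The gap between the no-feedback figure and the assessed range can be illustrated with the standard linear feedback relation, in which equilibrium warming equals the no-feedback warming divided by (1 - f), where f is the net feedback fraction. The sketch below is illustrative only: the function names, the 1.1 C no-feedback value (standing in for "a bit more than 1 C") and the use of this simple relation are assumptions, not something taken from the article.

```python
def equilibrium_warming(no_feedback_c: float, feedback_fraction: float) -> float:
    """Warming per CO2 doubling under a simple linear feedback model.

    feedback_fraction is the net feedback fraction f (assumed < 1);
    f = 0 means no feedbacks, larger f means stronger amplification.
    """
    if feedback_fraction >= 1.0:
        raise ValueError("feedback_fraction must be below 1 for a stable climate")
    return no_feedback_c / (1.0 - feedback_fraction)


def implied_feedback_fraction(no_feedback_c: float, sensitivity_c: float) -> float:
    """Net feedback fraction f needed to turn no_feedback_c into sensitivity_c."""
    return 1.0 - no_feedback_c / sensitivity_c


# Assumed value for "a bit more than 1 C" of warming with feedbacks ignored.
NO_FEEDBACK_C = 1.1

if __name__ == "__main__":
    # Bounds and midpoint of the 1.5 to 4.5 C range quoted in the text.
    for sensitivity in (1.5, 3.0, 4.5):
        f = implied_feedback_fraction(NO_FEEDBACK_C, sensitivity)
        print(f"sensitivity {sensitivity:.1f} C -> net feedback fraction {f:.2f}")
```

Under these assumptions, modest changes in the feedback fraction move the result a long way at the high end, which is one way to see why uncertainty about feedbacks produces such a wide sensitivity range.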

About Skeptical Science

The goal of Skeptical Science is to explain what peer reviewed science has to say about global warming. When you peruse the many arguments of global warming skeptics, a pattern emerges. Skeptic arguments tend to focus on narrow pieces of the puzzle while neglecting the broader picture. For example, focus on Climategate emails neglects the full weight of scientific evidence for man-made global warming. Concentrating on a few growing glaciers ignores the world wide trend of accelerating glacier shrinkage. Claims of global cooling fail to realise the planet as a whole is still accumulating heat. This website presents the broader picture by explaining the peer reviewed scientific literature.

Often, the reasons for disbelieving in man-made global warming seem to be political rather than scientific, e.g. “it’s all a liberal plot to spread socialism and destroy capitalism”. As one person put it, “the cheerleaders for doing something about global warming seem to be largely the cheerleaders for many causes of which I disapprove”. However, what is causing global warming is a purely scientific question. Skeptical Science removes the politics from the debate by concentrating solely on the science.

About the author

Skeptical Science is maintained by John Cook, the Climate Communication Fellow for the Global Change Institute at the University of Queensland. He studied physics at the University of Queensland, Australia. After graduating, he majored in solar physics in his post-grad honours year. He is not a climate scientist. Consequently, the science presented on Skeptical Science is not his own but taken directly from the peer reviewed scientific literature. Those seeking to refute the science presented need to address the peer reviewed papers where the science comes from (links to the full papers are provided whenever possible).

There is no funding to maintain Skeptical Science other than Paypal donations – it’s run at personal expense. John Cook has no affiliations with any organisations or political groups. Skeptical Science is strictly a labour of love. The design was created by John’s talented web designer wife.

Source: www.skepticalscience.com

What a Brilliant Idea! Take long term view

The threat of climate change is not one that can be addressed with just technological developments – economics and finance are also needed to provide a more holistic approach to the problem. In an interview Larry Brilliant, CEO for Skoll Global Threats Fund, issued a call for business owners to adopt a long-term view of threats to the global economy, and for more investments in clean technology. These investments may just come from what are currently fossil fuel subsidies, as the WWF urges governments to convert billions of dollars into investments in clean, renewable energy.

JOURNAL REPORTS (30 March 2013):

Climate Change Is the Risk That Increases All Others

Larry Brilliant is president and CEO of Skoll Global Threats Fund, an organization devoted to building alliances and finding solutions to some of the world’s most pressing problems.

Climate change is the greatest risk we face. It’s the great exacerbater. It exacerbates the risk of pandemics. It exacerbates the risks of water. It exacerbates the risk of conflict. Take a look at South Asia, where China owns the ice, India owns the water and has 21 dams, and Pakistan and Bangladesh are out of luck. Pakistan’s entire food production is dependent on two other nuclear-armed countries.

On CEO priorities

Joe White, Senior Editor for The Wall Street Journal, talks with Larry Brilliant, CEO for Skoll Global Threats Fund, about how innovators are working to answer some of the world’s most complex social challenges.

I was the CEO of two public companies. When I’m looking at quarterly profits, I start working on the next 90 days before this 90 days is over. How can I look up, in all honesty, and say, “There’s an odorless, colorless, tasteless, invisible gas that’s going to destroy the world 20 years from now”?

Our structure is wrong. The incentive system is misaligned with having these powerful, strong, smart CEOs focus on the issues ahead.

Climate change has an extremely high probability if you’re looking at a 20-year time frame. But in a quarter, it has a low probability.

On what’s holding back clean-energy investment

When Steve Jobs got his genome sequenced, it was $100,000. Within five years, it’ll be $100 or $200. If I was a businessperson, I would say, “There’s a tremendous amount of money to be made in the combination of big data, epidemiological analysis and what’s going to be a treasure trove of data from genomic sequencing.” Who’s going to be trying to find the new drugs? Who’s going to try to find the effect of drugs already on genes? That’s the place that I would be looking as an investor. Not climate.

We’re truly not going to make a huge change [on attacking global warming] unless we have a price on carbon. Until there’s a price on carbon and entrepreneurs know what to aim at and companies know what to produce, we’re not going to make that huge progress that we need.

A version of this article appeared March 26, 2013, on page R2 in the U.S. edition of The Wall Street Journal, with the headline: Climate Change Is the Risk That Increases All Others.

Source: www.online.wsj.com

Global Fossil Fuel Subsidies Must Be Transformed into Financing for Energy Efficiency and Renewable Energy

In AZoCleantech.com (30 March 2013):

The continued maintenance of fossil fuel subsidies is a global scandal and governments should work to transform these subsidies into financing for energy efficiency and renewable energy, says WWF, responding to a report released today by the International Monetary Fund (IMF).

The IMF report Energy Subsidy Reform: Lessons and Implications shines a much-needed light on the dark side of fossil fuel subsidies.

The IMF assessment shows that global fossil fuel subsidies – including carbon pollution impacts from fossil fuels – account for almost 9% of all annual country budgets – amounting to a staggering $US 1.9 trillion, much higher than previously estimated. And importantly, says WWF Global Climate & Energy Initiative leader Samantha Smith, the report confirms that the poorest 20% of developing countries only marginally benefit from energy subsidies.

“Removing these subsidies would reduce carbon pollution by 13%. This would be a major step towards reducing the world’s carbon footprint. Maintenance of these subsidies is a global scandal, a crime against the environment and an active instrument against clean energy and technological innovation. We strongly support transforming fossil fuel subsidies into an effective scheme for financing energy efficiency and renewables and making sure that the poor in developing countries benefit appropriately and receive clean, affordable and reliable energy,” she says.

The IMF findings show that almost half of fossil fuel subsidies occur in OECD nations. The US, with about $US 500 billion annually, accounts for more than one quarter of all global fossil fuel subsidies, followed by China with almost $US 300 billion and Russia ($US 115 billion).
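The shares implied by these figures can be checked with a few lines of arithmetic. This is a rough sketch: it uses the rounded numbers quoted in the text (the $US 1.9 trillion global total and the three country figures), and the variable and function names are made up for illustration.

```python
# Figures quoted in the text, in billions of US dollars per year.
# The text gives them as approximations ("about", "almost"), so shares are rough.
GLOBAL_TOTAL_BN = 1900.0
SUBSIDIES_BN = {"US": 500.0, "China": 300.0, "Russia": 115.0}


def share_of_global(country: str) -> float:
    """Fraction of the quoted global total attributed to one country."""
    return SUBSIDIES_BN[country] / GLOBAL_TOTAL_BN


if __name__ == "__main__":
    for country in SUBSIDIES_BN:
        print(f"{country}: {share_of_global(country):.1%} of the global total")
```

On these rounded inputs the US share comes out a little above one quarter, consistent with the statement in the text.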

WWF Global Energy Policy Director Stephan Singer says industrialised countries are responsible for the lion’s share of fossil fuel subsidies and should act now to stop them. “If they were to abolish those subsidies and reform towards renewables and energy efficiency investments, it would more than triple present global investment into renewables,” he says. “And that is what is needed for a world powered by 100% sustainable renewables.”

Source: http://www.wwf.ca/ and http://www.azocleantech.com

Climate sensitivity rears its worrying head

Despite the addition of about 100 billion tonnes of carbon to the atmosphere over the last decade, mean global temperature has remained unchanged, as The Economist reports in its latest issue. For climate scientists this could mean the climate is less sensitive to changes in carbon dioxide levels. However, regardless of the climate’s sensitivity, the continued pumping of carbon dioxide into the atmosphere is expected to cause an increase in global temperature. Best be prepared for the consequences that follow.

Climate science

A sensitive matter

The climate may be heating up less in response to greenhouse-gas emissions than was once thought. But that does not mean the problem is going away

The Economist (30 March 2013):

OVER the past 15 years air temperatures at the Earth’s surface have been flat while greenhouse-gas emissions have continued to soar. The world added roughly 100 billion tonnes of carbon to the atmosphere between 2000 and 2010. That is about a quarter of all the CO₂ put there by humanity since 1750. And yet, as James Hansen, the head of NASA’s Goddard Institute for Space Studies, observes, “the five-year mean global temperature has been flat for a decade.”

Temperatures fluctuate over short periods, but this lack of new warming is a surprise. Ed Hawkins, of the University of Reading, in Britain, points out that surface temperatures since 2005 are already at the low end of the range of projections derived from 20 climate models. If they remain flat, they will fall outside the models’ range within a few years.

The mismatch between rising greenhouse-gas emissions and not-rising temperatures is among the biggest puzzles in climate science just now. It does not mean global warming is a delusion. Flat though they are, temperatures in the first decade of the 21st century remain almost 1°C above their level in the first decade of the 20th. But the puzzle does need explaining.

The mismatch might mean that—for some unexplained reason—there has been a temporary lag between more carbon dioxide and higher temperatures in 2000-10. Or it might be that the 1990s, when temperatures were rising fast, was the anomalous period. Or, as an increasing body of research is suggesting, it may be that the climate is responding to higher concentrations of carbon dioxide in ways that had not been properly understood before. This possibility, if true, could have profound significance both for climate science and for environmental and social policy.

The insensitive planet

The term scientists use to describe the way the climate reacts to changes in carbon-dioxide levels is “climate sensitivity”. This is usually defined as how much hotter the Earth will get for each doubling of CO₂ concentrations. So-called equilibrium sensitivity, the commonest measure, refers to the temperature rise after allowing all feedback mechanisms to work (but without accounting for changes in vegetation and ice sheets).

Carbon dioxide itself absorbs infra-red at a consistent rate. For each doubling of CO₂ levels you get roughly 1°C of warming. A rise in concentrations from preindustrial levels of 280 parts per million (ppm) to 560ppm would thus warm the Earth by 1°C. If that were all there was to worry about, there would, as it were, be nothing to worry about. A 1°C rise could be shrugged off. But things are not that simple, for two reasons. One is that rising CO₂ levels directly influence phenomena such as the amount of water vapour (also a greenhouse gas) and clouds that amplify or diminish the temperature rise. This affects equilibrium sensitivity directly, meaning doubling carbon concentrations would produce more than a 1°C rise in temperature. The second is that other things, such as adding soot and other aerosols to the atmosphere, add to or subtract from the effect of CO₂. All serious climate scientists agree on these two lines of reasoning. But they disagree on the size of the change that is predicted.
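Because each doubling is said to add the same amount of warming, the relationship between concentration and warming is logarithmic. A minimal sketch of that arithmetic follows, assuming the commonly used log-base-2 form; the function name and the example concentrations other than 280 ppm and 560 ppm are illustrative assumptions, and the 1°C default is the feedback-free figure quoted above.

```python
import math


def warming_from_co2(ppm: float, baseline_ppm: float = 280.0,
                     per_doubling_c: float = 1.0) -> float:
    """Warming relative to the baseline, assuming a fixed rise per CO2 doubling.

    per_doubling_c = 1.0 is the feedback-free figure quoted in the text;
    pass a larger value to represent a sensitivity that includes feedbacks.
    """
    if ppm <= 0 or baseline_ppm <= 0:
        raise ValueError("concentrations must be positive")
    return per_doubling_c * math.log2(ppm / baseline_ppm)


if __name__ == "__main__":
    # 280 -> 560 ppm is one doubling; 1120 ppm would be two doublings.
    for ppm in (280.0, 400.0, 560.0, 1120.0):
        print(f"{ppm:6.0f} ppm: {warming_from_co2(ppm):.2f} C without feedbacks")
    # The same doubling under an assumed 3 C sensitivity (the IPCC best estimate).
    print(f"560 ppm at 3 C per doubling: {warming_from_co2(560.0, per_doubling_c=3.0):.2f} C")
```

The same function covers both cases discussed in the text: the bare 1°C response and a larger equilibrium sensitivity, simply by changing `per_doubling_c`.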

The Intergovernmental Panel on Climate Change (IPCC), which embodies the mainstream of climate science, reckons the answer is about 3°C, plus or minus a degree or so. In its most recent assessment (in 2007), it wrote that “the equilibrium climate sensitivity…is likely to be in the range 2°C to 4.5°C with a best estimate of about 3°C and is very unlikely to be less than 1.5°C. Values higher than 4.5°C cannot be excluded.” The IPCC’s next assessment is due in September. A draft version was recently leaked. It gave the same range of likely outcomes and added an upper limit of sensitivity of 6°C to 7°C.

A rise of around 3°C could be extremely damaging. The IPCC’s earlier assessment said such a rise could mean that more areas would be affected by drought; that up to 30% of species could be at greater risk of extinction; that most corals would face significant biodiversity losses; and that there would be likely increases of intense tropical cyclones and much higher sea levels.

New Model Army

Other recent studies, though, paint a different picture. An unpublished report by the Research Council of Norway, a government-funded body, which was compiled by a team led by Terje Berntsen of the University of Oslo, uses a different method from the IPCC’s. It concludes there is a 90% probability that doubling CO₂ emissions will increase temperatures by only 1.2-2.9°C, with the most likely figure being 1.9°C. The top of the study’s range is well below the IPCC’s upper estimates of likely sensitivity.

This study has not been peer-reviewed; it may be unreliable. But its projections are not unique. Work by Julia Hargreaves of the Research Institute for Global Change in Yokohama, which was published in 2012, suggests a 90% chance of the actual change being in the range of 0.5-4.0°C, with a mean of 2.3°C. This is based on the way the climate behaved about 20,000 years ago, at the peak of the last ice age, a period when carbon-dioxide concentrations leapt. Nic Lewis, an independent climate scientist, got an even lower range in a study accepted for publication: 1.0-3.0°C, with a mean of 1.6°C. His calculations reanalysed work cited by the IPCC and took account of more recent temperature data. In all these calculations, the chances of climate sensitivity above 4.5°C become vanishingly small.

If such estimates were right, they would require revisions to the science of climate change and, possibly, to public policies. If, as conventional wisdom has it, global temperatures could rise by 3°C or more in response to a doubling of emissions, then the correct response would be the one to which most of the world pays lip service: rein in the warming and the greenhouse gases causing it. This is called “mitigation”, in the jargon. Moreover, if there were an outside possibility of something catastrophic, such as a 6°C rise, that could justify drastic interventions. This would be similar to taking out disaster insurance. It may seem an unnecessary expense when you are forking out for the premiums, but when you need it, you really need it. Many economists, including William Nordhaus of Yale University, have made this case.

If, however, temperatures are likely to rise by only 2°C in response to a doubling of carbon emissions (and if the likelihood of a 6°C increase is trivial), the calculation might change. Perhaps the world should seek to adjust to (rather than stop) the greenhouse-gas splurge. There is no point buying earthquake insurance if you do not live in an earthquake zone. In this case more adaptation rather than more mitigation might be the right policy at the margin. But that would be good advice only if these new estimates really were more reliable than the old ones. And different results come from different models.

One type of model—general-circulation models, or GCMs—use a bottom-up approach. These divide the Earth and its atmosphere into a grid which generates an enormous number of calculations in order to imitate the climate system and the multiple influences upon it. The advantage of such complex models is that they are extremely detailed. Their disadvantage is that they do not respond to new temperature readings. They simulate the way the climate works over the long run, without taking account of what current observations are. Their sensitivity is based upon how accurately they describe the processes and feedbacks in the climate system.

The other type—energy-balance models—are simpler. They are top-down, treating the Earth as a single unit or as two hemispheres, and representing the whole climate with a few equations reflecting things such as changes in greenhouse gases, volcanic aerosols and global temperatures. Such models do not try to describe the complexities of the climate. That is a drawback. But they have an advantage, too: unlike the GCMs, they explicitly use temperature data to estimate the sensitivity of the climate system, so they respond to actual climate observations.

The IPCC’s estimates of climate sensitivity are based partly on GCMs. Because these reflect scientists’ understanding of how the climate works, and that understanding has not changed much, the models have not changed either and do not reflect the recent hiatus in rising temperatures. In contrast, the Norwegian study was based on an energy-balance model. So were earlier influential ones by Reto Knutti of the Institute for Atmospheric and Climate Science in Zurich; by Piers Forster of the University of Leeds and Jonathan Gregory of the University of Reading; by Natalia Andronova and Michael Schlesinger, both of the University of Illinois; and by Magne Aldrin of the Norwegian Computing Centre (who is also a co-author of the new Norwegian study). All these found lower climate sensitivities. The paper by Drs Forster and Gregory found a central estimate of 1.6°C for equilibrium sensitivity, with a 95% likelihood of a 1.0-4.1°C range. That by Dr Aldrin and others found a 90% likelihood of a 1.2-3.5°C range.

It might seem obvious that energy-balance models are better: do they not fit what is actually happening? Yes, but that is not the whole story. Myles Allen of Oxford University points out that energy-balance models are better at representing simple and direct climate feedback mechanisms than indirect and dynamic ones. Most greenhouse gases are straightforward: they warm the climate. The direct impact of volcanoes is also straightforward: they cool it by reflecting sunlight back. But volcanoes also change circulation patterns in the atmosphere, which can then warm the climate indirectly, partially offsetting the direct cooling. Simple energy-balance models cannot capture this indirect feedback. So they may exaggerate volcanic cooling.

This means that if, for some reason, there were factors that temporarily muffled the impact of greenhouse-gas emissions on global temperatures, the simple energy-balance models might not pick them up. They will be too responsive to passing slowdowns. In short, the different sorts of climate model measure somewhat different things.

Clouds of uncertainty

This also means the case for saying the climate is less sensitive to CO₂ emissions than previously believed cannot rest on models alone. There must be other explanations—and, as it happens, there are: individual climatic influences and feedback loops that amplify (and sometimes moderate) climate change.

Begin with aerosols, such as those from sulphates. These stop the atmosphere from warming by reflecting sunlight. Some heat it, too. But on balance aerosols offset the warming impact of carbon dioxide and other greenhouse gases. Most climate models reckon that aerosols cool the atmosphere by about 0.3-0.5°C. If that underestimated aerosols’ effects, perhaps it might explain the lack of recent warming.

Yet it does not. In fact, it may actually be an overestimate. Over the past few years, measurements of aerosols have improved enormously. Detailed data from satellites and balloons suggest their cooling effect is lower (and their warming greater, where that occurs). The leaked assessment from the IPCC (which is still subject to review and revision) suggested that aerosols’ estimated radiative “forcing” (their warming or cooling effect) had changed from minus 1.2 watts per square metre of the Earth’s surface in the 2007 assessment to minus 0.7W/m² now: ie, less cooling.

One of the commonest and most important aerosols is soot (also known as black carbon). This warms the atmosphere because it absorbs sunlight, as black things do. The most detailed study of soot was published in January and also found more net warming than had previously been thought. It reckoned black carbon had a direct warming effect of around 1.1W/m². Though indirect effects offset some of this, the effect is still greater than an earlier estimate by the United Nations Environment Programme of 0.3-0.6W/m².

All this makes the recent period of flat temperatures even more puzzling. If aerosols are not cooling the Earth as much as was thought, then global warming ought to be gathering pace. But it is not. Something must be reining it back. One candidate is lower climate sensitivity.

A related possibility is that general-circulation climate models may be overestimating the impact of clouds (which are themselves influenced by aerosols). In all such models, clouds amplify global warming, sometimes by a lot. But as the leaked IPCC assessment says, “the cloud feedback remains the most uncertain radiative feedback in climate models.” It is even possible that some clouds may dampen, not amplify global warming—which may also help explain the hiatus in rising temperatures. If clouds have less of an effect, climate sensitivity would be lower.

So the explanation may lie in the air—but then again it may not. Perhaps it lies in the oceans. But here, too, facts get in the way. Over the past decade the long-term rise in surface seawater temperatures seems to have stalled (see chart 2), which suggests that the oceans are not absorbing as much heat from the atmosphere.

As with aerosols, this conclusion is based on better data from new measuring devices. But it applies only to the upper 700 metres of the sea. What is going on below that—particularly at depths of 2km or more—is obscure. A study in Geophysical Research Letters by Kevin Trenberth of America’s National Centre for Atmospheric Research and others found that 30% of the ocean warming in the past decade has occurred in the deep ocean (below 700 metres). The study says a substantial amount of global warming is going into the oceans, and the deep oceans are heating up in an unprecedented way. If so, that would also help explain the temperature hiatus.

Double-A minus

Lastly, there is some evidence that the natural (ie, non-man-made) variability of temperatures may be somewhat greater than the IPCC has thought. A recent paper by Ka-Kit Tung and Jiansong Zhou in the Proceedings of the National Academy of Sciences links temperature changes from 1750 to natural changes (such as sea temperatures in the Atlantic Ocean) and suggests that “the anthropogenic global-warming trends might have been overestimated by a factor of two in the second half of the 20th century.” It is possible, therefore, that both the rise in temperatures in the 1990s and the flattening in the 2000s have been caused in part by natural variability.

So what does all this amount to? The scientists are cautious about interpreting their findings. As Dr Knutti puts it, “the bottom line is that there are several lines of evidence, where the observed trends are pushing down, whereas the models are pushing up, so my personal view is that the overall assessment hasn’t changed much.”

But given the hiatus in warming and all the new evidence, a small reduction in estimates of climate sensitivity would seem to be justified: a downwards nudge on various best estimates from 3°C to 2.5°C, perhaps; a lower ceiling (around 4.5°C), certainly. If climate scientists were credit-rating agencies, climate sensitivity would be on negative watch. But it would not yet be downgraded.

Equilibrium climate sensitivity is a benchmark in climate science. But it is a very specific measure. It attempts to describe what would happen to the climate once all the feedback mechanisms have worked through; equilibrium in this sense takes centuries—too long for most policymakers. As Gerard Roe of the University of Washington argues, even if climate sensitivity were as high as the IPCC suggests, its effects would be minuscule under any plausible discount rate because it operates over such long periods. So it is one thing to ask how climate sensitivity might be changing; a different question is to ask what the policy consequences might be.
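The discounting point can be made concrete with ordinary exponential discounting. The sketch below is not Dr Roe's calculation; the damage figure, the 200-year horizon and the discount rates are hypothetical values chosen only to show how quickly present value shrinks over centuries.

```python
def present_value(future_damage: float, annual_rate: float, years: int) -> float:
    """Value today of a cost incurred `years` from now, discounted annually."""
    if annual_rate <= -1.0:
        raise ValueError("annual_rate must be greater than -1")
    return future_damage / (1.0 + annual_rate) ** years


if __name__ == "__main__":
    # Hypothetical: a damage of 100 (arbitrary units) felt 200 years from now.
    DAMAGE = 100.0
    HORIZON_YEARS = 200
    for rate in (0.01, 0.03, 0.05):
        pv = present_value(DAMAGE, rate, HORIZON_YEARS)
        print(f"discount rate {rate:.0%}: present value {pv:.4f}")
```

Even at a low rate the discounted figure is a small fraction of the original, which is the sense in which effects that take centuries to arrive weigh little in a conventional cost-benefit calculation.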

For that, a more useful measure is the transient climate response (TCR), the temperature you reach after doubling CO₂ gradually over 70 years. Unlike the equilibrium response, the transient one can be observed directly; there is much less controversy about it. Most estimates put the TCR at about 1.5°C, with a range of 1-2°C. Isaac Held of America’s National Oceanic and Atmospheric Administration recently calculated his “personal best estimate” for the TCR: 1.4°C, reflecting the new estimates for aerosols and natural variability.

That sounds reassuring: the TCR is below estimates for equilibrium climate sensitivity. But the TCR captures only some of the warming that those 70 years of emissions would eventually generate because carbon dioxide stays in the atmosphere for much longer.

As a rule of thumb, global temperatures rise by about 1.5°C for each trillion tonnes of carbon put into the atmosphere. The world has pumped out half a trillion tonnes of carbon since 1750, and temperatures have risen by 0.8°C. At current rates, the next half-trillion tonnes will be emitted by 2045; the one after that before 2080.
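The rule of thumb above is a simple proportionality, so the milestones in the paragraph can be tabulated directly. This is a sketch under that stated rule only; the function and constant names are illustrative, and the linear relation is taken from the text rather than from any particular model.

```python
# Rule of thumb from the text: about 1.5 C per trillion tonnes of carbon emitted.
WARMING_PER_TRILLION_TONNES_C = 1.5


def warming_from_cumulative_carbon(trillion_tonnes: float) -> float:
    """Approximate warming since 1750 for a given cumulative carbon emission."""
    if trillion_tonnes < 0:
        raise ValueError("cumulative emissions cannot be negative")
    return WARMING_PER_TRILLION_TONNES_C * trillion_tonnes


# Cumulative totals implied by the text: half a trillion tonnes so far,
# another half by 2045, and a further half before 2080.
MILESTONES = [("to date", 0.5), ("by 2045", 1.0), ("before 2080", 1.5)]

if __name__ == "__main__":
    for label, cumulative in MILESTONES:
        warming = warming_from_cumulative_carbon(cumulative)
        print(f"{label}: {cumulative:.1f} trillion tonnes -> about {warming:.2f} C")
```

For the emissions to date the rule gives 0.75°C, close to the 0.8°C of observed warming quoted in the text.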

Since CO₂ accumulates in the atmosphere, this could increase temperatures compared with pre-industrial levels by around 2°C even with a lower sensitivity and perhaps nearer to 4°C at the top end of the estimates. Despite all the work on sensitivity, no one really knows how the climate would react if temperatures rose by as much as 4°C. Hardly reassuring.

Source: http://www.economist.com

Is Asia leading the way to a clean energy revolution?

Flying under the radar thus far, the energy revolution in Asia has changed the way countries here are powering their economies and communities. Adding renewable energy capacity at a breakneck pace, countries such as Japan, China, and India can serve as models for the United States and other nations to shift towards non-polluting, sustainable energy as envisioned by the Rocky Mountain Institute’s Reinventing Fire.

Asia’s Accelerating Energy Revolution

By Amory B. Lovins, Cofounder, Chairman and Chief Scientist, Rocky Mountain Institute (26 March 2013):

In late 2012, RMI’s cofounder, chairman, and chief scientist Amory Lovins spent seven weeks in Japan, China, India, Indonesia, and Singapore observing Asia’s emerging green energy revolution. In February 2013, he returned to Japan and China. Japan, China, and India—all vulnerable to climate change—turned out to be in different stages of a “shared and massive shift” to a green energy future, one with remarkable similarities to RMI’s Reinventing Fire vision for the United States.

Largely unnoticed in the West, Asia’s energy revolution is gathering speed. It’s driven by the same economic and strategic logic that Reinventing Fire showed could profitably shift the United States from fossil-fuel-based and nuclear energy to three-times-more-efficient use and three-fourths renewables by 2050.

Renewable energy now provides one-fifth of the world’s electricity and has added about half of the world’s new generating capacity each year since 2008. Excluding big hydro dams, renewables got $250 billion in private investment in 2011 alone, adding 84 GW, according to Bloomberg New Energy Finance and ren21.net. The results were similar in 2012.

While RMI explores how key partners could apply our U.S. synthesis to other countries, including China, revolutionary shifts—strikingly parallel to our approach—are already emerging in the three biggest Asian economies: Japan, China, and India. They add strong reasons to expect the already-underway renewable revolution to scale even further and faster.

Japan Awakens

After world-leading energy efficiency gains in the 1970s, Japan’s energy kaizen stagnated. Japanese industry remains among the most efficient of 11 major industrial nations, but Japan now ranks tenth among them in industrial cogeneration and commercial building efficiency, eighth in truck efficiency, and ties with the U.S. for next-to-last in car efficiency. With such low efficiencies and very high energy prices—far higher for electricity than in a more competitive market structure, while gas prices are historically linked to oil prices—fixing these inefficiencies can be stunningly profitable. For example, retrofitting semiconductor company Rohm’s Japan head office in front of the Kyoto railway station—even without using superwindows as RMI did in the Empire State Building retrofit—saved even more energy (44 percent) with a faster payback (two years).

As the debate triggered by the Fukushima disaster opens up a profound public energy conversation, Japan is starting to see those tremendous building-efficiency opportunities, and to realize that it is the richest in renewable energy (wind, solar, and marine in particular) of any major industrial country. Japan has twice the per-hectare high-quality renewable potential of North America, three times that of Europe, and nine times that of Germany. Yet Japan’s renewable share of electricity generation is one-ninth that of Germany, so its renewable power exploitation is exactly the opposite of its relative endowment!

Why such poor renewable generation and modest ambition despite such rich local resources? Answer: because Japan is in a race with Chile to be the last OECD country to establish an independent grid operator bringing supply competition and transparent pricing to the old geographic utility monopolies.

But that is changing; Japan’s once-monolithic business support for utility monopolies-cum-monopsonies (one seller, one buyer) is eroding. Important impetus came in July 2012 from a bold initiative—feed-in tariffs that promote greater renewables integration into the grid—championed by Softbank founder and now solar entrepreneur Masayoshi Son. The tariffs were set at three to four times original European levels, because the government, apparently based on 2005 foreign prices, unaccountably believed renewables cost that much in Japan, where even commodities like PV racks, cables, and junction boxes sell for about twice the world price. Some 5.2 GW of feed-in-tariff applications were approved in 2012, including 3.9 GW of non-residential solar. As prices fall, the Industry Minister is expected to start phasing down the solar feed-in tariff with a 10 percent cut this spring.

Meanwhile, though, the government changed to one more aligned with incumbent monopolists, delaying reforms. And some utilities have been exploiting a loophole that lets them unilaterally reject renewable offerings, without explanation or appeal, as risky to grid stability—so the developer gets no payment and doesn’t build.

As these internal divisions play out, the Land of the Rising Sun is getting eclipsed—making early progress despite persistent obstacles, but falling far short of its potential and others’ progress. Japan added nearly 3 GW of photovoltaics in 2012—but even less-organized Italy installed 7 GW in 2011. Similarly, Japan’s windpower association projects the same market share in 2050 that Spain achieved back in 2010.

But there are hopeful signs too. Son-san’s initiative was supported by at least 34 provincial governors. Hiroshi Mikitani, billionaire founder of the e-commerce giant Rakuten, just left the powerful traditional business forum Keidanren to form a reformist rival group. Slowly, in the subtle and complex Japanese way, business leaders’ center of gravity is shifting toward better buys and more entrepreneurial models. Real projects demonstrating renewables’ competitive advantage will speed that shift.

And if it does take hold, the world has long learned that nothing is as fast as Japanese industry taking over a sector. Nothing, that is, except its Chinese counterpart.

China Scales

China is the world’s #1 energy user and carbon emitter, accounting for 55 percent of world energy-consumption growth during 2000–2011. Yet China now also leads the world in five renewable technologies (wind, photovoltaics, small hydro, solar water heaters, and biogas) and aims to lead in all. Its solar and wind power industries have grown explosively: windpower doubled in each of five successive years. In 2012, China installed more than a third of the world’s new wind capacity and should beat 2015’s official 100 GW windpower target by more than a year.

China owns most of the world’s photovoltaic manufacturing capacity, which can produce over twice what the world installed in 2012, so the government has boosted its 2020 PV target to 50 GW to soak up the surplus in a few years. Meanwhile, overcapacity drove consolidation, with over 100 makers exiting the world market in 2012. But plunging prices plus Western innovation have made wind and solar into likely power-marketplace winners—even with incentives shrinking to zero.

As non-hydro renewables head for about 11 percent of China’s 2020 electricity generation, vigorous industrial and appliance efficiency efforts are trimming demand growth. To be sure, coal still supplies two-thirds of China’s energy and nearly four-fifths of its electricity, but its star is dimming. Two-thirds of the coal-fired power plants added in 2003–06 were apparently unauthorized by Beijing, but in 2006–10, net additions of coal-fired capacity fell by half, then kept shrinking. In 2011, investment in new coal plants fell by one-fourth to less than half its 2005 level, and China’s top five power companies—squeezed between rising coal prices and government-frozen electricity prices—lost $2.4 billion on coal-fired generation. Coal’s hidden costs, including rail bottlenecks, are becoming manifest; public opposition is rising. And now further speeding the shift from coal to efficiency and renewables is dangerously polluted air (especially in Beijing) as coal-burning mixes with the exhausts of abundant but polluting new cars. (Shanghai’s Singapore-like auction now prices a new-car license plate above a small car to put it on.) Bad air has suddenly created a powerful grassroots environmental movement.

In November 2012, the 18th Party Congress for the first time headlined a “revolution in energy production and use”—strong language from an organization founded in revolution. The incoming leaders had already earmarked major funding to explore how to accelerate China’s transition beyond coal. They clearly intend to fix what President Hu called an “unbalanced, uncoordinated, and unsustainable” development pattern and to get off coal faster. An unprecedented 2012 dip in coal-fired generation, even as the economy grew, was caused largely by a slowdown in manufacturing and strong hydro runoff, but renewables and efficiency too are starting to displace coal. China is making a real bid to be the new Germany, leading not only in making but also in applying its abundant renewable assets.

India Starts Tipping

So what about the country that—together with China—is responsible for 76 percent of the world’s planned 1.4 trillion watts of coal-fired power plants and 90 percent of the projected growth in global coal demand to 2016; that plans (implausibly) to build a coal-fired plant fleet twice as big as America’s; and that will ultimately surpass China in population, though one-fourth of its people still lack electricity?

India’s power generation is still mainly coal-fired, but India’s coal is only abundant, not cheap. Chronic coal-sector and logistics challenges have created growing import dependence (as in China, which became a net importer in 2009). That helped the six countries that control four-fifths of global coal exports to gain the market power to boost prices, so they did. Rising coal imports and a weakening currency gave India a macroeconomic headache and power producers a financial migraine.

Coal prices 2–3 times assumptions imposed a grave price/cost squeeze on two of the world’s biggest coal plants—4-GW projects owned by the largest generating firm (Tata Power, part of Tata Group that’s nearly 5 percent of India’s GDP) and by Reliance. Many plants can’t even get enough coal, exacerbating electricity shortages, but the government doesn’t want to raise electricity prices, so the dominoes are falling. In December 2011, Infrastructure Development Finance Company stopped financing new coal plants. In February 2012, the Reserve Bank of India said it wouldn’t help banks that got in trouble on new coal-plant loans. Financing dried up. Three weeks later, Tata announced its investment emphasis had shifted from coal projects to wind and solar. Led by four of India’s richest families, India shelved plans for 42 GW of coal plants in the last three quarters of 2012. That’s nearly a fourth of existing total capacity, or 68 percent of the government’s short-term target—only 32 percent of which Coal India says it can fuel. With power-hampered growth threatened by even scarcer or costlier power, some of India’s electricity leaders are seeing their way forward in superefficiency, distributed renewables, and microgrids—and not only in rural areas.

As in China, vibrant private-sector entrepreneurship in renewables should be capable of far outpacing the state-owned industries that dominate coal and nuclear power. India, the world’s #3 windpower market, has already installed nearly four times more wind than nuclear capacity. Solar power too added 1 GW in 2012 and is taking off briskly. And of course India has huge efficiency opportunities because most of its ultimate infrastructure isn’t yet built. Projects like Infosys’s 70-percent-less-energy-using new offices in Bangalore—helped by ASHRAE Fellow and RMI Senior Fellow Peter Rumsey—are gaining wide attention. A constellation of impressive new nongovernmental efforts with businesses and governmental allies is starting to focus India’s energy policy reforms and business mobilizations.

In all, India seems to be at an energy tipping point. It is starting to comprehend its massive efficiency and renewable potential, and enjoys growing private-sector skills to capture that potential, especially if regulatory barriers can be removed. All of this as the power sector—chastened by the world-record summer 2012 blackout of 600 million people—starts to face the need for serious reforms. The main missing elements, as in China and Japan, include rewarding utilities for cutting your bill (instead of selling you more energy), and allowing demand-side resources to compete in supply-side bidding.

Of course, there are major challenges in modernizing a sprawling, disjointed sector with irregular management quality and transparency. But with so many brilliant entrepreneurs and engineers, important advances are already emerging from the bottom up at the firm, municipal, and state levels, whether led or followed by national policy. And as we’ll see in the second part of this blog, all three of Asia’s economic giants will be increasingly informed and perhaps inspired by the example of their European counterpart and prime competitor, Germany, which is now switching to a green electricity system faster than anyone thought possible.

Source: http://blog.rmi.org

Asia makes a claim in low-carbon competitiveness and climate research

A low-carbon economy entails not just sustainable use of energy from low-carbon sources, but also low-carbon exports. The Low-Carbon Competitiveness Index, released by the Climate Institute, measures the ability of G20 nations to flourish in a low-carbon economy, and it shows East Asia overtaking Europe and the United States in action on climate change. Singapore is also increasing its preparedness for climate change with the opening of the new Centre for Climate Research, which aims to better predict weather patterns in its tropical climate. Read more

Asia leads on climate action

Eco News Byron Bay Canberra [AAP]:

China now earns as much from selling solar panels as it does from shoes, as Asia’s emerging economies prepare to prosper in a future that limits carbon emissions, a report says.

A new index measuring the ability of G20 nations to flourish in a low-carbon economy shows East Asia has taken over from Europe and the United States when it comes to action on climate change.

Japan, China and South Korea took out three of the top five spots on the Low-Carbon Competitiveness Index, released on Tuesday as part of the Climate Institute’s report on global climate action.

France leads the way, largely on the back of its low-emission nuclear energy sector, followed by Japan, China, South Korea and Great Britain.

Australia, though making slight improvements, languishes in 17th place and has been overtaken by Indonesia in its readiness for a low-carbon future.

The data is from 2010 and doesn’t include the impact of the federal government’s clean energy laws like the carbon price, but does include significant world events like the global recession.

The head of the Climate Institute, John Connor, says as other nations put constraints on carbon and pursue economic gains with less pollution, Australia may be left behind if it opts out of real action.

‘We could become stranded trying to sell something that is no longer of interest,’ he told AAP.

‘If it’s not seen to be doing its fair share, it could suffer both diplomatically and economically.’

Mr Connor said Australia had significant ‘lead in our saddlebags’, running a high-carbon economy in terms of both energy usage and exports.

Investment in clean energy, one measure of low-carbon preparedness, had stalled in Australia with industry uncertain about the future of the Renewable Energy Target (RET), he said.

Clean energy investments in Asia, meanwhile, hit $270 billion in 2012, while China earned $36 billion selling solar panels – about what it made from its traditional market in shoes.

Mr Connor said China was pushing for an emissions trading scheme and had indicated it wanted to rein in its coal consumption, in part to combat air pollution.

Many nations weren’t driven to take action on climate change for green reasons, but were motivated by a range of self-interest matters like energy security and productivity growth.

But even taking current efforts into account, the world was still on track to a global temperature rise above two degrees Celsius by 2050, a rate accepted by most nations as dangerous.

For this reason, Mr Connor said shifting to a carbon-constrained future where nations tried to get the most possible from a tonne of CO2 wasn’t going to be easy.

‘Australia, by virtue of its place (on the index), will be one of the ones which will suffer the most if we don’t really double down on low-carbon improvements,’ he said.

http://echonetdaily.echo.net.au

More reliable forecasts with new climate centre?

Research centre will focus on Singapore’s tropical conditions

By Grace Chua in The Straits Times (27 March 2013):

IS IT possible to predict monsoon storms more accurately? How will climate change affect rainfall in Singapore?

The new Centre for Climate Research, which opened officially yesterday, will tackle these questions, before advising agencies on managing water resources and flood risks, for example.

The centre, which is part of the National Environment Agency’s Meteorological Service, will be led by senior British researcher Chris Gordon, the former head of the UK Met Office’s Hadley Centre, Britain’s climate research arm.

One of its first priorities will be to work on Singapore’s second climate-change vulnerability study, the first phase of which is expected to be done by late 2014, said Dr Gordon, who begins as director on April 15.

It will use the latest climate models to update the first such study, started in 2007, to improve the reliability of predictions.

The centre will also study poorly understood tropical weather systems which have unique features such as thunderstorms caused by convection – hot moist air rising and forming clouds.

The centre, located in Paya Lebar, hopes to produce seasonal weather forecasts. For example, while February is normally warm and dry, a monsoon surge made last month exceptionally wet. Researchers hope to predict such unusual patterns ahead of time.

“The single biggest issue is to explain uncertainty in a way that doesn’t cause people to lose confidence. People don’t want a range of outcomes – they want the outcome,” Dr Gordon said.

The centre, which will cost between $7 million and $8 million a year to run and have a staff of 25, is part of national plans to build climate science capabilities, and focus on Singapore’s tropical climate.

It was first mooted in 2011, a year after intense rain caused flash floods across the island, including the Orchard Road shopping district.

The director-general of the Meteorological Service, Ms Wong Chin Ling, said: “There is a common misconception that climate change and environmental issues are a problem for the distant future.

“The reality is that preparedness must begin in the present.”

The mother of all disruptive technologies: Carbon by another name

Graphene – carbon by another name – is the latest wonder material. Since its discovery in 2004, billions of research dollars have been poured into it, with Singapore institutions among the world’s top research centres. Touted for being light yet strong, graphene could be used in applications ranging from bendable display screens and light bulb-less lighting to smaller yet more powerful microprocessors. Dubbed the ‘Godfather of Graphene’, Prof. Antônio Helio de Castro Neto envisions graphene as the mother of all disruptive technologies. Read more

By Chang Ai-lien Senior Correspondent Sunday Times Singapore (24 March 2013):

“Many of us have been working with graphene since it was discovered in 2004,” said NUS dean of science Andrew Wee. “We recognised the potential early, put in a proposal for a centre and it took off. In science you have to do things early because after a few years everybody is doing it.”

The effort is paying off, he points out: NUS and NTU are respectively ranked second and third worldwide in terms of graphene publications, behind only the Chinese Academy of Sciences – a multi-institutional organisation – and ahead of the likes of MIT and the Russian Academy of Sciences. Ranked by country, Singapore is No. 7 worldwide.

Now, the main player here – the $40 million Graphene Research Centre at NUS which began operations in 2010 – has 26 principal investigators plus many PhD students and research fellows, and is involved in research funding to the order of $100 million, and 50 patent applications.

Its director, Prof Castro Neto, said: “We have some of the best people in the world, and the best equipment for the complete innovation cycle; from synthesising the material to making the devices. We have everything in place to be the best in the world.”

Source: www.stasiareport.com

All Voices

Hangzhou : China (23 March 2013):

Chinese researchers claim to have created the lightest material ever made, so light in fact that the material, as demonstrated by the researchers, could be balanced on the petals of a flower.

Building upon the existing substance known as graphene, which is itself considered to be the lightest material in the world, the researchers from Zhejiang University in Hangzhou, China, combined the ‘aerogel’ with freeze-dried carbon to create the new solid substance, weighing only 0.16 milligrams per cubic centimetre. At this weight, the new carbon/graphene mix has about twice the density of hydrogen but is less dense than helium. The Zhejiang researchers demonstrated the new wonder substance’s featherweight properties by balancing a piece of it on a cherry blossom flower.
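The density comparison above can be checked with a short Python sketch. The aerogel figure comes from the article. The gas densities are standard reference values at 0°C and 1 atmosphere, which are an assumption added here because the article does not say which conditions it has in mind.

```python
# Density of the new aerogel as reported in the article (mg per cubic centimetre).
AEROGEL_DENSITY = 0.16

# Reference gas densities at 0 °C and 1 atm (mg per cubic centimetre).
# These values are not from the article; they are assumed for the comparison.
HYDROGEN_DENSITY = 0.0899
HELIUM_DENSITY = 0.1786

ratio_to_hydrogen = AEROGEL_DENSITY / HYDROGEN_DENSITY
ratio_to_helium = AEROGEL_DENSITY / HELIUM_DENSITY

print(f"Aerogel vs hydrogen: {ratio_to_hydrogen:.2f}x")
print(f"Aerogel vs helium:   {ratio_to_helium:.2f}x")
print("Lighter than helium:", AEROGEL_DENSITY < HELIUM_DENSITY)
```

With these reference values the aerogel comes out a little under twice as dense as hydrogen and roughly 10 percent less dense than helium, which is consistent with the claim as reported.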

But what makes graphene such a revolutionary substance is not its density but its strength, with a one-square-metre sheet weighing only 0.77 milligrams being strong enough to support the weight of a 4 kilogram cat. In addition to this, graphene is also the thinnest material ever made, being very flexible, a very good conductor of electricity and a very good filter.

Source: www.allvoices.com

23 March 2013:

‘Graphene Godfather’ makes a disruptive Brazilian play

Science in Brazil

Brazilian scientist spanning three continents: Prof. Antônio Helio de Castro Neto

One scientist has earned his right to the title ‘Godfather of Graphene.’

This miracle carbon substance is now attracting tens of billions of dollars in research funding, both public and private, as countries engage in a new arms race to develop technologies that promise to consign today’s micro-electronics and display technology to the scrapheap.

Before long, elegant graphene-backed bendable screens will make the ubiquitous star-cracked glass on today’s iPads, iPhones and other brittle hand-helds into just a distant nightmare. Graphene fibers could turbocharge today’s over-burdened internet backbone and produce “Star Trek” lighting that does not require light bulbs.

Today the international conference circuit discussing graphene, or graphite exfoliated into single-atom thin sheets, mobilises thousands of nanotechnology researchers. But less than a decade ago, when the original interest group met on the fringes of the American Physical Society, its members could fit comfortably into a single SUV.

And the scientist, who back in 2004 might have been driving that very same SUV, is an engaging 48-year-old Brazilian with scientific collaborations on three continents, named Antônio Helio de Castro Neto.

Prof. Castro Neto didn’t himself write the book on graphene alone – but for a decade he has played the role of a world leader in this new area of science and technology. For instance, when the Nobel committee was considering in 2010 whether to award a Physics Prize to Russians Andre Geim and Konstantin Novoselov of Manchester University, it’s reported that Prof. Castro Neto played an important role in informing the Nobel committee.

The physicist won’t confirm this, but he was certainly an honored guest at the Stockholm ceremony. And he remains close friends with the two laureates (now Professor Sir Andre Geim and Professor Sir Konstantin Novoselov), whom he’d helped to understand the electronic properties of the new substance when the Russians were drafting their original 2005 paper about the creation of the first experimental graphene devices. And Geim and Novoselov’s names have since appeared with Castro Neto’s on a number of important papers. His resume shows that ever since the 1990s he’s been a hyper-energetic writer of papers and organizer of nanotechnology conferences. He’s even at home in TV broadcasting.

Prof. Castro Neto is a passionate advocate of graphene, which he describes as “strong as a diamond, with great stiffness – but at the same time it’s extremely soft, so you can deform it like plastic.” Graphene’s three key characteristics, says Castro Neto, are: “it’s one atom thick; it conducts electricity extremely well at room temperature; and it’s completely transparent. That means the number of applications for graphene is only limited by your imagination.”

Graphene, in fact, could prove to be the mother of all disruptive technologies.

Before long indium tin oxide or ITO, today used in all the world’s touch screens, could go the way of the Dodo. Rare metals now used in electronics such as indium, which sells for up to US$600 a kilo, will become redundant as pollution-free graphene sourced from feedstock like ethanol “changes the environmental dynamics of the electronics industry.”

One commonly-held misconception is that graphene will eventually replace silicon, the material from which every microchip is made. While silicon is a semiconductor, graphene has always-on conductive properties better than copper. “It won’t ever replace silicon because you can’t turn off the electric current,” says Castro Neto.

Yet, unlike silicon compounds, graphene is completely stable and will never degrade. And, affirms Castro Neto, because a single gram of graphene can cover an area of 2,600 square meters, it will revolutionize optical applications such as displays, touch screens, and the world of hand-held devices. Journalists love citing the claim that a sheet of graphene could only be broken by a force equivalent to an elephant standing on a pencil-point.
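The figure of 2,600 square meters per gram can be compared with the sheet weight of 0.77 milligrams per square meter quoted earlier in this digest. The Python sketch below does that arithmetic. Reading the 2,600 figure as counting both faces of the sheet is an assumption made here, not something either article states.

```python
# Weight of a one-square-metre graphene sheet, as quoted earlier in this digest.
SHEET_MASS_MG_PER_M2 = 0.77

MG_PER_GRAM = 1000.0

# Area of single-layer sheet that one gram would make, counting one face only.
area_one_face_m2 = MG_PER_GRAM / SHEET_MASS_MG_PER_M2

# Surface area if both faces of the sheet are counted (assumed interpretation).
area_both_faces_m2 = 2 * area_one_face_m2

print(f"One face:   {area_one_face_m2:.0f} square metres per gram")
print(f"Both faces: {area_both_faces_m2:.0f} square metres per gram")
```

Counting one face gives roughly 1,300 square meters per gram, and counting both gives roughly 2,600, so the two quoted figures agree only under the two-face reading.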

What’s clear is that this engaging professor doesn’t simply know his science. He has invaluable strategic knowledge of one of the world’s most frenzied research areas. He knows whose research is hot, and who’s come to the game too late. He knows which multinationals are bogged down in the search for high-volume graphene synthesis that will unlock commercial applications.

Above all, he knows exactly how small, nimble laboratories from developing nations could yet leap-frog these big-spending giants to snatch elusive prizes from under the noses of the world’s premier research establishments or its secretive multinationals.

So when it was announced in 2010 that Castro Neto, a Professor at Boston University’s Department of Physics, was also taking up the post of Director of the National University of Singapore’s Graphene Research Centre, it turned some scientific heads. Singapore has since become a world center of excellence for graphene.

More heads turned too in 2013, when Castro Neto added a third research title to his name by taking up a visiting professorship in Brazil, at São Paulo’s Mackenzie Presbyterian University. Mackenzie’s dean, Benedito Guimarães Aguiar Neto, is developing a center of excellence specialized in graphene-based photonics called MackGrafe (Centro Mackenzie de Pesquisas Avançadas em Grafeno e Nanomateriais).

While Mackenzie may be a proficient engineering university with a solid reputation, it’s not exactly a world-class institution and it’s outside the charmed circle of Brazilian state and federal universities that consume the lion’s share of national research budgets. Castro Neto does have strong links to UFMG, the federal university of Minas Gerais state, and UNICAMP, the state university of Campinas, but it appears he’s focusing his attention on Mackenzie.

Mackenzie University does have access to sizable budgets from São Paulo State and its funding agency, FAPESP (São Paulo Research Foundation). But compared to the European Union’s EUR 1 billion Future and Emerging Technologies (FET) program, or the Korean industrial giant Samsung’s EUR 5 billion annual research budget, Mackenzie’s resources are tiny, with just a US$ 20 million investment.

Let’s not forget the odds are stacked against those unable to spend big in the Great Graphene Race. Right now, national graphene establishments are a bit like research bases scattered across Antarctica. Every country needs to put its flag on the map, so it can claim a share of any future bonanza.

The UK, although it hosts two Nobel Prize winners, only decided to invest a modest EUR 24 million in the field in late 2012. Today it has just 54 patents and is widely regarded as a “latecomer” to a race dominated by Asian giants. Of the 7,351 graphene patents and applications worldwide by the end of 2012, Chinese institutions and corporations top the list with 2,200, while the US is second with 1,754 patents, its champions being US multinational SanDisk, with the Massachusetts Institute of Technology (Harvard MIT).

So what exactly is Prof Castro Neto doing with Brazil, and how did Mackenzie have the good fortune to land such a big catch?

Firstly, Castro Neto is himself a Brazilian. He may have worked overseas for two decades, but he was attracted by a 3-year visiting professorship to his home country. By joining forces with Singapore, he feels the two universities could punch above their weight in the field of opto-electronics, such as OLEDs (organic light emitting diodes).

Secondly, there’s no doubt the academic is attracted by a David and Goliath narrative, in which Brazil — a nation with no domestic electronics industry of its own — has the ambition and vision to use research to develop a completely new economic activity. There is, he says, an opportunity to develop industries from scratch to secure a new technology future.

“We have an opportunity to do something really ‘out of the box’ here,” says Castro Neto. “And my knowledge helps. I know the field so well I can suggest the niches where Mackenzie can score some goals. We’re looking for small things with huge payoffs.”

The focus on optical solutions means Mackenzie’s graphene research center team led by Dr Eunézio Antonio de Souza will, with Castro Neto’s support, work on transforming concepts into potential products – while avoiding duplicating research being carried out elsewhere.

Already there’s talk of reducing download times with the help of a high-speed, next-generation solution to the fiber optic wires that carry the world’s internet signals. And developing flexible non-glass interfaces for handheld devices that will make today’s tablets look and feel like tombstones. Mackenzie does have a track record in developing digital systems for communications and TV. The Brazilian unit will also be working with graphene-based lasers.

It’s an ambitious scenario, that Brazil could develop both the intellectual property around graphene and a whole new industry to exploit it. However, to achieve the goal of building a ‘Graphene Valley’ in downtown São Paulo, something more than academic rigor will be needed.

Perhaps it’s no coincidence then, that Prof Castro Neto and those backing graphene research in Brazil have avoided the stuffy world of federally-funded research universities. At these academies, patrician scientists tend to favor lifetime state appointments, shunning commercial opportunities for a self-referential world of teaching and conferences. “Brazil has an inbuilt concept of ‘pure science’ and academic rigor; but there’s not enough applied science,” says Castro Neto. “I believe there’s only good science and bad science.”

Certainly, Brazil’s shocking paucity of patents, and the genteel poverty of many of its academics, is a world away from the rock-star academic culture of the US or the UK. Brazilian professors just don’t write pop-science books or present popular TV shows – and few have any entrepreneurial instincts.

So importing a hard-driving Menlo Park ethos will be necessary to realize any dream of a national graphene industry. Perhaps that’s why Mackenzie is planning to hire foreign researchers. “We will need to introduce the notion of the scientist-entrepreneur from scratch in Brazil,” concedes Castro Neto.

So the ‘Godfather of Graphene’ is taking on a series of ambitious challenges.

He’s running a triangular research career on three continents; he’s seeking to show that highly-focused scientists working together in developing countries stand a chance of beating Big Research at its own game; and he’s trying to introduce the concept of the scientist-entrepreneur to Brazil.

Luckily, he has an ‘atom-thin’ miracle substance to help him do all these things. And if graphene fulfils its promise of being a truly disruptive technology, there’s no reason why these ambitions should not succeed.

Source: www.scienceforbrazil.com and www.graphene.nus.edu.sg

The economies of scale and the environmental cost of energy not even

Mid-day on the 24th of March was a significant time for Germany. At that moment, more than half of its electricity output came from renewable sources – wind and solar. With plans to double production within the next decade, Germany could soon be mostly powered by renewable sources, with fossil fuel power plants acting only as backups. However, not all renewable sources are created equal. The environmental impact of some sources – like wind and hydro – will have to be balanced against the gains. Read more

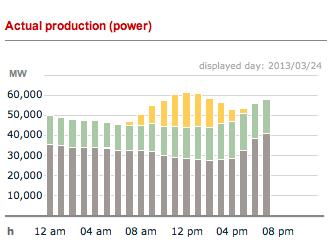

Graph of the Day: Wind, solar provide half Germany’s energy output

By Giles Parkinson in Reneweconomy (25 March 2013):

Today’s Graph of the Day will tell a story that will be repeated more regularly in coming months and years – the growing impact of solar and wind energy in countries such as Germany.

This comes from Sunday (March 24) and shows that in the middle of the day, more than half of Germany’s electricity output came from wind and solar. Two things are striking – one is the amount of solar capacity produced on a day in early spring, with nearly 20GW at its peak. The second is the consistent contribution of wind energy, which accounted for more than 25 per cent of the overall output throughout the day.

Imagine, then, what will happen when Germany doubles the amount of wind and solar production, as it plans to do within the next decade. On days like this, there will simply be no room for fossil fuel production – the so-called “base load”. Any coal or gas fired generation that remains will need to be capable of being switched on and off on demand. The base load/peak load model will be turned on its head – to be replaced by dispatchable and non-dispatchable generation. Fossil fuels will be required just to fill in the gaps.

The original graph can be found here. Notice, too, the difference between what was delivered by wind and solar, and what was planned (graph below). There’s little difference. For all the talk about “intermittent” renewables, their output is actually quite predictable – more so than swings in demand ever were.

Source: www.reneweconomy.com.au

The Price of Green Energy: Is Germany Killing the Environment to Save It?

By SPIEGEL Staff

The German government is carrying out a rapid expansion of renewable energies like wind, solar and biogas, yet the process is taking a toll on nature conservation. The issue is causing a rift in the environmental movement, pitting “green energy” supporters against ecologists.

The Bagpipe, a woody knoll in northern Hesse, can only be recommended to hikers with reservations. This here is lumberjack country. Broad, clear-cut lanes crisscross the area. The tracks of heavy vehicles can be seen in the snow. And there is a vast clearing full of the stumps of recently felled trees.

Martin Kaiser, a forest expert with Greenpeace, gets up on a thick stump and points in a circle. “Mighty, old beech trees used to stand all over here,” he says. Now the branches of the felled giants lie in large piles on the ground. Here and there, lone bare-branch survivors project into the sky.

Kaiser says this is “a climate-policy disaster” and estimates that this clear-cutting alone will release more than 1,000 metric tons of carbon dioxide into the atmosphere. Forests are important for lowering levels of greenhouse gases, as large quantities of carbon dioxide are trapped in wood — especially the wood of ancient beech trees like these. Less than two years ago, UNESCO added the “Ancient Beech Forests of Germany” to its list of World Natural Heritage Sites.

It wasn’t any private forest magnate who cleared these woods out. Rather, it was Hessen-Forst, a forestry company owned by the western German state of Hesse. For some years now, wood has enjoyed a reputation for being an excellent source of energy, one that is eco-friendly and presumably climate neutral. At the moment, more than half of the lumber felled in Germany makes its way into biomass power plants or wood-pellet heating systems. The result has been an increase in prices for wood and the related profit expectations. The prospect of making a quick buck, Kaiser says, “has led to a downright brutalization of the forestry business.”

The Costs of Going Green

One would assume that ecology and the Energiewende, Germany’s plans to phase out nuclear energy and increase its reliance on renewable sources, were natural allies. But in reality, the two goals have been coming into greater and greater conflict. “With the use of wood, especially,” Kaiser says, “the limits of sustainability have already been exceeded several times.” To understand what this really means, one needs to know Kaiser’s background: For several years, he has been the head of the climate division at Greenpeace Germany’s headquarters in Hamburg.

Things have changed in Germany since Chancellor Angela Merkel’s government launched its energy transition policy in June 2011, prompted by the Fukushima nuclear power plant catastrophe in Japan. The decision to hastily shut down all German nuclear power plants by 2022 has shifted the political fronts. Old coalitions have been shattered and replaced by new ones. In an ironic twist, members of the environmentalist Green Party have suddenly mutated into advocates of an unprecedented industrialization of large areas of land, while Merkel’s conservative Christian Democrats have been advocating for more measures to protect nature.

Merkel’s energy policies have driven a deep wedge into the environmental movement. While it celebrates the success of renewable energies as one of its greatest victories, it is profoundly unsettled by the effects of the energy transition, which can be seen everywhere across the country.

Indeed, this is not just about cleared forests. Grasslands and fields are being transformed into oceans of energy-producing corn that stretch beyond the horizon. Farmers are using digestate, a by-product of biogas production, to fertilize their fields as soon as they thaw from the winter. And entire tracts of land are being put to industrial use — converted into enormous solar power plants, wind farms or highways of power lines, which will soon stretch from northern to southern Germany.

The public discourse about the energy transition plan is still dominated by its supporters, including many environmentalists who want to see the expansion of renewable energies at any price. They set the tone in government agencies, functioning as advisors to renewable energy firms and policymakers alike. But then there are those feeling increasingly uncomfortable with the way things are going. Out of fear of environmental destruction, they no longer want to remain silent.

Greens in Awkward Position

Although this conflict touches all political parties, none is more affected than the Greens. Since the party’s founding in 1980, it has championed a nuclear phaseout and fought for clean energy. But now that this phaseout is underway, the Greens are realizing a large part of their dream — the utopian idea of a society operating on “good” power — is vanishing into thin air. Green energy, they have found, comes at an enormous cost. And the environment will also pay a price if things keep going as they have been.

Within the Greens’ parliamentary group in the Bundestag, politicians focused on energy policy are facing off against those who champion environmental conservation, fighting over how much support the party should throw behind Merkel’s energy transition. Those who prioritize the environment face a stiff challenge, given that Jürgen Trittin — co-chairman of the parliamentary group who long served as environment minister — is clearly more concerned with energy issues.

In debates, members of the pro-environmental camp have occasionally even been hissed at for supposedly playing into the hands of the nuclear lobby. “We should overcome the temptation to sacrifice environmental protection for the sake of fighting climate change,” says Undine Kurth, a Green parliamentarian from the eastern city of Magdeburg. “Preserving a stable natural environment is just as important.”

“Of course there is friction between environment and climate protection advocates, even in my party,” says Robert Habeck, a leader of the Greens in the northern state of Schleswig-Holstein who became its “Energiewende minister” in June 2012 — the first person in Germany to hold that title. “We Greens have suddenly also become an infrastructure party that pushes energy projects forward, while on the other side the classic CDU clientele is taking to the barricades. It’s just like it was 30 years ago, only with reversed roles.”

This role is an unfamiliar one for environmentalists. For a long time, they were the good guys, and the others were the bad guys. But now they’re suddenly on the defensive. They used to be the ones who stood before administrative courts to fight highway and railway projects to protect Northern Shoveler ducks, Great Bustards or rare frog species. But now they are forced to defend massive high-voltage power lines while being careful not to scare off their core environmentalist clientele.

Bärbel Höhn, a former environment minister of the western state of North Rhine-Westphalia, has a reputation for being a bridge-builder between the blocs. She concedes that there have been mistakes, like with using corn for energy. But these are just teething problems that must be overcome, she adds reassuringly.

Encroaching on Nature Reserves

The opposition in Berlin has so far contented itself with criticizing Merkel, believing that her climate policies have failed and that she has steered Germany’s most important infrastructure project into a wall. Granted, neither the center-left Social Democratic Party (SPD) nor the Greens are part of the ruling coalition at the federal level, but they do jointly govern a number of Germany’s 16 federal states. And, when forced to choose between nature and renewable energies, it is usually nature that takes a back seat in those states.

It was in this way that, in 2009, Germany’s largest solar park to date arose right in the middle of the Lieberoser Heide, a bird sanctuary about 100 kilometers (62 miles) southeast of Berlin. Since German reunification in 1990, more than 200 endangered species have settled in the former military training grounds. But that didn’t seem to matter. In spite of all the protests by environmentalists, huge areas of ancient pine trees were clear-cut in order to make room for solar collectors bigger than soccer fields.

A similar thing happened in Baden-Württemberg, even though the southwestern state has been led for almost two years by Winfried Kretschmann, the first state governor in Germany belonging to the Green Party. In 2012, it was the Greens there who passed a wind-energy decree that aims to boost the number of wind turbines in the state from 400 to roughly 2,500 by 2020. And in the party’s reckoning, nature is standing in the way.

The decree includes an exemption that makes it easier to erect huge windmills in nature conservation areas, where they are otherwise forbidden. But now this exception threatens to become the rule: In many regions of the state, including Stuttgart, Esslingen and Göppingen, district administrators are reporting that they plan to permit wind farms to be erected in several nature reserves.

But apparently even that isn’t enough for Claus Schmiedel, the SPD leader in the state parliament. Two weeks ago, he wrote a letter to Kretschmann recommending that he put the bothersome conservationists back into line. Schmiedel claimed that investors in renewable energies were being “serially harassed by the low-level regional nature-conservation authorities” — and complained that the state government wasn’t doing enough to combat this.

Fears of Magnetic Fields

Just as controversial as the wind farms are the massive electricity masts of the power lines, which bring wind energy from the north to large urban areas in the south. This has led the Greens to favor cables laid underground over the huge overhead lines for some time now. Trittin, the party’s co-leader, believes that using buried cables offers an opportunity “to expand the grid with the backing of the people.”

Ironically, however, there is growing resistance to this supposedly eco- and citizen-friendly form of power transmission on the western edge of Göttingen, a university town in central Germany that lies in Trittin’s electoral district.

Harald Wiedemann, of the local citizens’ initiative opposed to underground cables, has already sent to the printers a poster that reads: “Stop! You are now leaving the radiation-free sector.” Plans call for laying 12 cables as thick as an arm 1.5 meters (5 feet) below ground. Wiedemann warns that the planned high-voltage lines will create dangerous magnetic fields.

He and some other locals have marked out the planned course of the lines with barrier tape. It veers away from the highway north of the village, cuts through the fields, runs right next to an elementary school and through a drinking water protection area.

Wiedemann is also the head of the city organization of the Greens, who are generally known as Energiewende backers. “But why do things have to be done so slapdash?” he asks. The planning seems “fragmented,” he says, and those behind it have forgotten “nature conservation, health and agriculture.”

Indeed, underground cables are anything but gentle on the landscape. Twelve thick metal cables laid out in a path 20 meters wide are required to transmit 380,000 volts. No trees are allowed to grow above this strip lest the roots interfere with the cables. The cables warm the earth, and the magnetic fields created by the alternating current power cables also terrify many.

Nature Suffers

Many nature conservationists believe that Germany’s Energiewende is throwing the baby out with the bath water. For example, last week, Germany’s Federal Agency for Nature Conservation (BfN) hosted a meeting of scientists and representatives from nature conservation organizations and energy associations in the eastern city of Leipzig.